AGI is Too Quick

You don’t normally hear a developer saying such things, but it’s true with generative AI. Notwithstanding the oft-seen sluggishness of OpenAI’s APIs, large language models (LLMs) are quick out of the blocks to give you a response. Too quick.

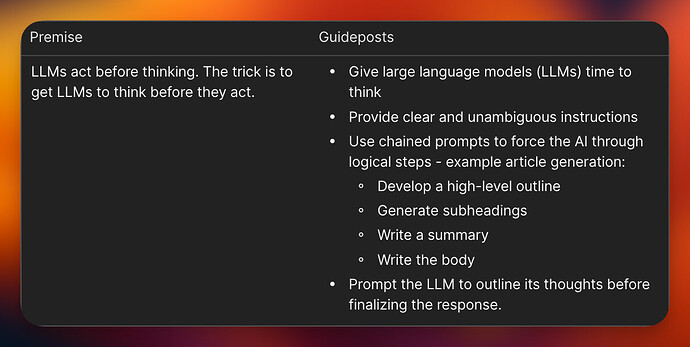

LLMs speak before they think. You need to get them to think before they speak.

In today’s fast-paced business world, it is more important than ever to stay ahead of the curve when it comes to technology. One of the most exciting developments in recent years has been the emergence of AI solution architectures, which can help businesses automate routine tasks, identify patterns in data, and provide insights that can inform strategic decision-making.

In this article, I’ll make it obvious how to reap the benefits of using Coda AI to create something like an article with a fair degree of control over the response.

The Importance of AI Solution Prompts

As technology continues to advance, it has become increasingly important for businesses to adapt and incorporate AI solution architectures into their operations. The benefits of doing so are numerous, including increased efficiency, cost savings, and improved decision-making capabilities. However, implementing these systems can be complex and challenging.

Prompts are the pathway to getting it right. But your words are also your programming code. Often, there are hundreds of ways to write a prompt that produces poor answers, and only a few that get it right.

Understanding Coda AI

Coda AI is a powerful tool that can help businesses overcome these challenges. As you probably know by now, it provides a platform for building and deploying AI solutions quickly and easily. By leveraging machine learning and natural language processing, Coda AI can automate routine tasks, identify patterns in data, and provide insights that can inform strategic decision-making.

It leaves plenty of room for everyone’s own interpretation of AI solutions and of how they might use an AI feature. This is a blessing and a curse, because now you need to compose commands and queries using words. Words have meaning - use them wisely.

Productivity Boosting with Coda AI

One of the most significant benefits of Coda AI is its ability to streamline workflows and increase productivity. By automating repetitive tasks, employees can focus on more strategic work that requires their unique skills and expertise. Additionally, Coda AI can help identify areas where processes can be improved, leading to even greater efficiency gains.

At the core of the gold rush to leverage AI for greater productivity is getting it to write for us. If not the entire article, then at least the basic stuff, so we can quickly add clarity or remove hallucinations.

Many users have already expressed dissatisfaction with Coda AI as they learn just how difficult it can be to get favorable results from AI features. The simple stuff tends to work well, such as summarizing or extracting key terms. However, writing a brief or research paper is just dang difficult.

LLMs Require Smart Prompts

Coda AI is also well-suited for building complex prompts. By leveraging its natural language processing capabilities, Coda AI can help automate content creation with relatively good control. This can save businesses significant time and resources, as well as improve the quality of their documents. But… it needs to be carefully guided to deliver on this promise.

Most of this article was generated using Coda AI. This is the prompt I used.

Note the multiple [Output] tags. Each one is an inference deliverable and they are often dependent on earlier outputs. This causes the LLM to perform each step in a serial fashion (i.e., we’re getting it to think before it writes some of the final passages of the article).

This is a simple way to create chained AI workflows.
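
To make the chaining idea concrete, here is a minimal Python sketch of how such a prompt might be assembled. It is not the prompt used for this article and not anything Coda AI exposes; the `Step` class, the `build_chained_prompt` function, and the exact wording of each line are assumptions made for illustration. The only element taken from the text above is the `[Output]` tag marking each deliverable.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One deliverable in the chain (hypothetical helper type)."""
    name: str         # short label for the deliverable
    instruction: str  # what the model should produce at this step


def build_chained_prompt(topic: str, steps: list[Step]) -> str:
    """Assemble a single prompt whose [Output] items are listed in order.

    Later steps can refer to earlier ones by name, which nudges the model
    to finish the groundwork before it writes the final passages.
    """
    lines = [
        f"Topic: {topic}",
        "Complete the deliverables below in order. "
        "A later deliverable may build on earlier ones.",
        "",
    ]
    for number, step in enumerate(steps, start=1):
        # One [Output] tag per deliverable, numbered so the order is explicit.
        lines.append(f"[Output] {number}. {step.name}: {step.instruction}")
    return "\n".join(lines)


if __name__ == "__main__":
    steps = [
        Step("Outline", "List five section headings for the article."),
        Step("Key points", "Give two key points for each heading in Outline."),
        Step("Draft", "Write one paragraph per heading using Key points."),
    ]
    print(build_chained_prompt("Controlling AI-written articles", steps))
```

The design choice is simply ordering: the outline and key points come before the draft, so the draft step has something concrete to depend on.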

The bold items are abstracted references to table cells that contain additional text that the prompt uses to guide the AI to a successful response. I mention prompt abstractions in this article.
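
As a rough illustration of prompt abstraction, the sketch below swaps bold placeholders in a prompt template for text held elsewhere. In Coda the references point at table cells; here a plain dictionary stands in for the table, and the `**Name**` placeholder syntax, the `resolve_prompt` function, and the cell names are all assumptions for this example rather than Coda's actual mechanism.

```python
import re

# Matches bold-style placeholders such as **Audience** (assumed syntax).
PLACEHOLDER = re.compile(r"\*\*(.+?)\*\*")


def resolve_prompt(template: str, cells: dict[str, str]) -> str:
    """Replace each **Name** placeholder with the text stored under Name."""

    def substitute(match: re.Match) -> str:
        key = match.group(1).strip()
        if key not in cells:
            # Fail loudly so a missing cell never reaches the model as-is.
            raise KeyError(f"No cell named {key!r}")
        return cells[key]

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    # The dictionary stands in for table cells holding guidance text.
    cells = {"Audience": "Coda makers", "Tone": "plain"}
    template = "Write for **Audience** in a **Tone** voice."
    print(resolve_prompt(template, cells))
```

Keeping the guidance text outside the prompt means it can be edited in one place without touching the prompt itself.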

The Future of Coda AI

Looking ahead, the potential applications for Coda AI are virtually limitless. As the technology continues to advance, it will become even more powerful and versatile, enabling businesses to achieve even greater efficiencies and insights. However, it is important to approach its implementation thoughtfully and strategically - every word counts.