Generative AI has a PR problem.

The vast majority of ChatGPT users have been misled into thinking AI is often stupid, especially with regard to numbers. Indeed, generative AI cannot perform math with precision because it is designed to do only one thing:

Predict the next token (roughly, a word or word fragment) based on the tokens that came before it.

When math is involved, it goes off the rails quickly.

While the LLM knows about numbers like 22, 0, and 8, it has no concept of 8022 or 2208 as single values, because these four-character numbers resolve to separate tokens in the LLM: 0, 8, and 22. While OpenAI has been actively adjusting its models to support math with greater precision, they remain untrustworthy in some instances.

A computation that works:

22080 * 3 + 1

A computation that fails:

22080 * 32 + 1

The first computation is correct (66,241). The second example fails - it responds with 704,641. The correct answer is 706,561. Bottom line: LLMs cannot perform simple math in every case.
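For comparison, here is the same arithmetic done with ordinary integer math. This is just a quick check of the two expressions above; the variable names are mine, and `reported` is the wrong answer quoted in the text.

```python
# Exact integer arithmetic for the two expressions above.
small = 22080 * 3 + 1
large = 22080 * 32 + 1

print(f"{small:,}")  # 66,241
print(f"{large:,}")  # 706,561

# Gap between the answer the model reported and the true value.
reported = 704_641
print(large - reported)  # 1920
```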

The way long numbers are split into tokens is why generative AI cannot perform reliable computations. This behavior needs to be explained to uninformed users who have come to expect computers, large and small, to get basic math right every time. If they understood the purpose of generative AI, it would soften this deep disappointment.

Still, there are many cases where generative AI is very accurate. Unfortunately, three things stand in the way of becoming an AI-first developer of no-code and low-code solutions:

- You already don’t trust it.

- You don’t put in the work to become a skilled prompt engineer.

- You won’t take the time to test your generative AI outputs.

If you did these things, you would accelerate your solution-building efforts. You would also craft features and capabilities that were heretofore considered impractical. You will need this competitive advantage as no/low-code merges with generative AI in the not-too-distant future.

Sidebar: No-code and AI will soon vanish. In their place will be #gen-apps.

More on this another time.

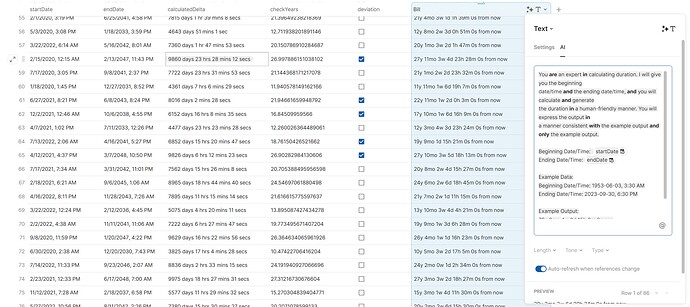

In a recent thread, participants claimed generative AI wasn’t precise enough to format numbers into the EU equivalent, i.e., $2,192.45 → $2.192,45. This document demonstrates the prompt I used, applied to 1,080 random tests. The results were perfect.
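A test run like this can be sketched as a small harness. This is not the harness I used, only a minimal illustration: `candidate_format` is a hypothetical stand-in for the model call (here it just swaps separators so the script runs on its own), and the value range and seed are arbitrary assumptions.

```python
import random

def us_format(cents: int) -> str:
    """Render an amount in cents as US-style text, e.g. $2,192.45."""
    return f"${cents // 100:,}.{cents % 100:02d}"

def reference_eu(cents: int) -> str:
    """Deterministic expected answer, e.g. $2.192,45."""
    return f"${cents // 100:,}".replace(",", ".") + f",{cents % 100:02d}"

def candidate_format(us_text: str) -> str:
    """Hypothetical stand-in for the generative AI call; swap in a real request."""
    return us_text.translate(str.maketrans({",": ".", ".": ","}))

def run_trials(n: int = 1080, seed: int = 0) -> list:
    """Compare candidate output to the reference on n random amounts."""
    rng = random.Random(seed)
    failures = []
    for _ in range(n):
        cents = rng.randrange(0, 10**9)
        us_text = us_format(cents)
        expected = reference_eu(cents)
        actual = candidate_format(us_text)
        if actual != expected:
            failures.append((us_text, expected, actual))
    return failures

failures = run_trials()
print(f"{len(failures)} failures out of 1080")
```

Keeping the failing triples, rather than just a count, makes it easy to see which inputs trip up the prompt.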

So, why does this work reliably when we just established that generative AI cannot handle large numbers with any predictability?

Answer: Strings

Generative AI is skilled at a task like this because you are asking it to do something with a string. You can even refer to the value as a number in the prompt, and the instructions (i.e., the term “format”) will cause it to be treated as a string.
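To see why this is a string task and not a math task, here is a minimal sketch of the same transformation in plain code. The function name `us_to_eu` is hypothetical; the point is that no arithmetic happens, only a character swap.

```python
def us_to_eu(amount: str) -> str:
    """Swap thousands and decimal separators, treating the amount as text."""
    # translate() applies both substitutions in one pass, so a comma that
    # became a period is not swapped back again.
    swap = str.maketrans({",": ".", ".": ","})
    return amount.translate(swap)

print(us_to_eu("$2,192.45"))  # $2.192,45
```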

Admittedly, in this case, there would be many benefits if we could perform math and CFL algorithms in native EU numbers. This approach requires all computations to stay in US format, with AI used only to render EU formats. However, this story is about more than EU formatting: it demonstrates that generative AI can be deterministic and eliminate complexities while reducing time-to-production.
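The split described here, compute in one format and render in another only at the end, might look like the sketch below. The prices are made up, and `render_eu` is a hypothetical placeholder for the rendering step, which in my setup is the AI prompt and not a local function.

```python
from decimal import Decimal, ROUND_HALF_UP

def render_eu(value: Decimal) -> str:
    """Placeholder for the final rendering step (the AI prompt in the article)."""
    rounded = value.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    us_text = f"${rounded:,.2f}"
    return us_text.translate(str.maketrans({",": ".", ".": ","}))

# All math stays in plain numeric values...
total = Decimal("730.15") * 3 + Decimal("2.00")

# ...and EU formatting happens once, at display time.
print(render_eu(total))  # $2.192,45
```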

I advise spending the time to do the work to become an AI-first builder of solutions. It’s undoubtedly the future, and the people who want your job will be doing the work.